The EU AI Act 2024: A Practical Guide for Product Consultation and E-Commerce

A comprehensive guide to the EU AI Act 2024 focusing on product consultation and e-commerce. Learn about risk levels, compliance, and how to use transparency to build trust.

Introduction: What is the EU AI Act and Why Should E-Commerce Care?

The EU AI Act, officially the Artificial Intelligence Act (Regulation (EU) 2024/1689), is a landmark law of the European Union. As the world's first comprehensive regulation of artificial intelligence (AI), it aims both to regulate and to promote the development and use of AI systems in Europe. The provisional political agreement reached in December 2023 marked a significant milestone in shaping Europe's digital future.

For online retailers and software providers, this law is more than just red tape. Its primary goal is to balance innovation and safety. It ensures that AI systems respect fundamental rights and EU values while promoting technological development and Europe's competitiveness. The regulation responds to the rapid progress of AI technologies and their growing role in all areas of life, including how products are sold and recommended online.

The legislative process has since been completed. After the December 2023 agreement, the European Parliament adopted the Act in March 2024, and the Council gave its final approval in May 2024. The Act creates a legal framework for the development and application of AI in the European Union that may set a global standard.

Timeline and Implementation: When Must You Act?

The EU AI Act is introduced in several phases. It was published in the Official Journal on July 12, 2024, and entered into force on August 1, 2024. This marks the beginning of a new era in the regulation of artificial intelligence in Europe.

- Entry into force (August 1, 2024): The clock starts ticking for compliance preparations.

- Prohibitions apply (February 2, 2025): Bans on 'Unacceptable Risk' AI take effect (e.g., social scoring, manipulative techniques).

- GPAI rules (August 2, 2025): Regulations for general-purpose AI models (like GPT-4) become effective.

- Full application (August 2, 2026): Rules for most 'High-Risk' systems apply fully.

Although the EU AI Act entered into force in mid-2024, not all provisions apply immediately. Implementation is gradual. The European AI Office, set up within the European Commission in early 2024, oversees the rollout, particularly for general-purpose AI. Six months after entry into force, the first bans apply, including the prohibition of social scoring and certain biometric identification systems. After 12 months, rules for providers of general-purpose AI models, including the foundation models behind generative AI, become effective. The main provisions for high-risk AI systems apply 24 months after entry into force. After 36 months, the remaining provisions become applicable.
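A small lookup table is enough to see which of these stages already apply on a given day. The sketch below is a minimal illustration. It assumes the application dates set out in Article 113 of the final text, where most stages start on the 2nd of the month rather than the 1st. The milestone labels are simplified summaries, and the function names `milestones_in_effect` and `next_milestone` are hypothetical.

```python
from datetime import date
from typing import List, Optional, Tuple

# Application dates as set out in Article 113 of Regulation (EU) 2024/1689.
# The labels are simplified summaries, not legal wording.
MILESTONES: List[Tuple[date, str]] = [
    (date(2024, 8, 1), "Entry into force"),
    (date(2025, 2, 2), "Prohibitions on unacceptable-risk AI apply"),
    (date(2025, 8, 2), "Rules for general-purpose AI models apply"),
    (date(2026, 8, 2), "Most remaining rules apply, incl. high-risk systems in Annex III"),
    (date(2027, 8, 2), "High-risk rules for AI in regulated products apply"),
]


def milestones_in_effect(on: date) -> List[str]:
    """Return the labels of all milestones reached on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]


def next_milestone(on: date) -> Optional[Tuple[date, str]]:
    """Return the first milestone strictly after `on`, or None if all have passed."""
    for start, label in MILESTONES:  # the list is sorted by date
        if start > on:
            return start, label
    return None


if __name__ == "__main__":
    check_date = date(2025, 3, 1)
    for label in milestones_in_effect(check_date):
        print("in effect:", label)
    print("next:", next_milestone(check_date))
```

Hard-coding the dates is deliberate. Adding "6 months" to August 1 would give February 1, which is one day off from the dates in the regulation.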

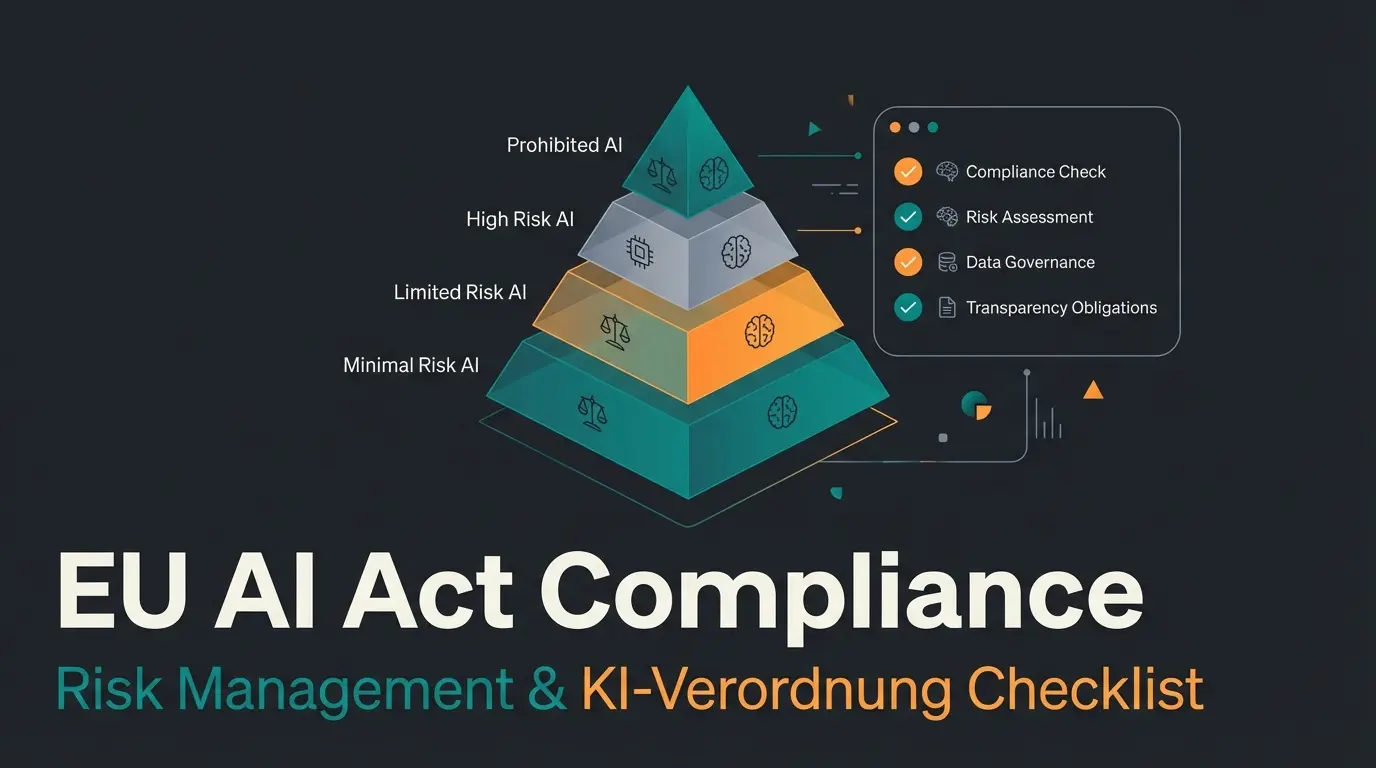

The 4 Risk Levels: Where Does Your Bot Stand?

The EU AI Act follows a risk-based approach: it classifies AI systems into categories and sets requirements proportionate to each. Knowing where your specific software fits, whether it is a product advisor or a customer service bot, is essential.

1. Unacceptable Risk (Prohibited)

The Act explicitly prohibits certain AI applications considered incompatible with EU values and fundamental rights. These forbidden practices include:

- Social scoring (by public or private actors)

- Untargeted scraping of facial images from the internet or CCTV footage

- Emotion recognition in the workplace and educational institutions

- AI systems that manipulate human behavior to circumvent free will (Dark Patterns)

Crucial for E-Commerce: The last point matters most. Your sales AI must persuade with facts, not manipulate with subliminal techniques.

2. High-Risk AI Systems

AI systems classified as high-risk are subject to strict requirements. These include systems used in critical infrastructure, education, employment, law enforcement, and justice. Requirements include comprehensive risk assessment, high data quality, detailed documentation, and human oversight. Most standard e-commerce product advisors do NOT fall into this category unless they are used for credit scoring.

3. Limited Risk (The Sweet Spot for Chatbots)

The EU AI Act places great emphasis on transparency, particularly for limited-risk AI systems such as chatbots. The core obligation under Article 50 is disclosure. Many providers go further and share additional information as good practice:

- Disclosure that the user is interacting with an AI system (mandatory unless this is obvious from the context).

- Information about the capabilities and limitations of the system.

- Warning about potential risks, such as the generation of misinformation.

4. Minimal Risk

This covers the vast majority of AI systems currently in use, such as spam filters or AI-enabled video games. These systems are largely unregulated.
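As a first internal triage step, a product team could record a few yes/no answers per system and check them from the strictest tier down. The sketch below is deliberately simplified. It assumes three hypothetical flags (`uses_prohibited_practice`, `high_risk_use_case`, `interacts_with_users`). Real classification depends on Article 5 and the Act's annexes, so this only reflects the four levels described above.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable risk (prohibited)"
    HIGH = "high risk"
    LIMITED = "limited risk (transparency obligations)"
    MINIMAL = "minimal risk"


@dataclass
class AISystemProfile:
    # Hypothetical yes/no answers a team might record for one AI system.
    uses_prohibited_practice: bool = False  # e.g. social scoring, manipulative techniques
    high_risk_use_case: bool = False        # e.g. assessing creditworthiness
    interacts_with_users: bool = False      # e.g. a chatbot or product advisor


def triage(profile: AISystemProfile) -> RiskTier:
    """Return the strictest tier the recorded answers point to.

    Checks run from strictest to mildest, so the first match wins. In the Act
    the tiers can overlap (a high-risk chatbot must still disclose that it is
    an AI); this sketch reports only the strictest tier.
    """
    if profile.uses_prohibited_practice:
        return RiskTier.UNACCEPTABLE
    if profile.high_risk_use_case:
        return RiskTier.HIGH
    if profile.interacts_with_users:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


if __name__ == "__main__":
    product_advisor = AISystemProfile(interacts_with_users=True)
    print(triage(product_advisor).value)  # limited risk (transparency obligations)
```

A typical e-commerce product advisor only sets `interacts_with_users`, so it lands in the limited-risk tier.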

"Limited Risk" and Transparency: The Opportunity for Sales AI

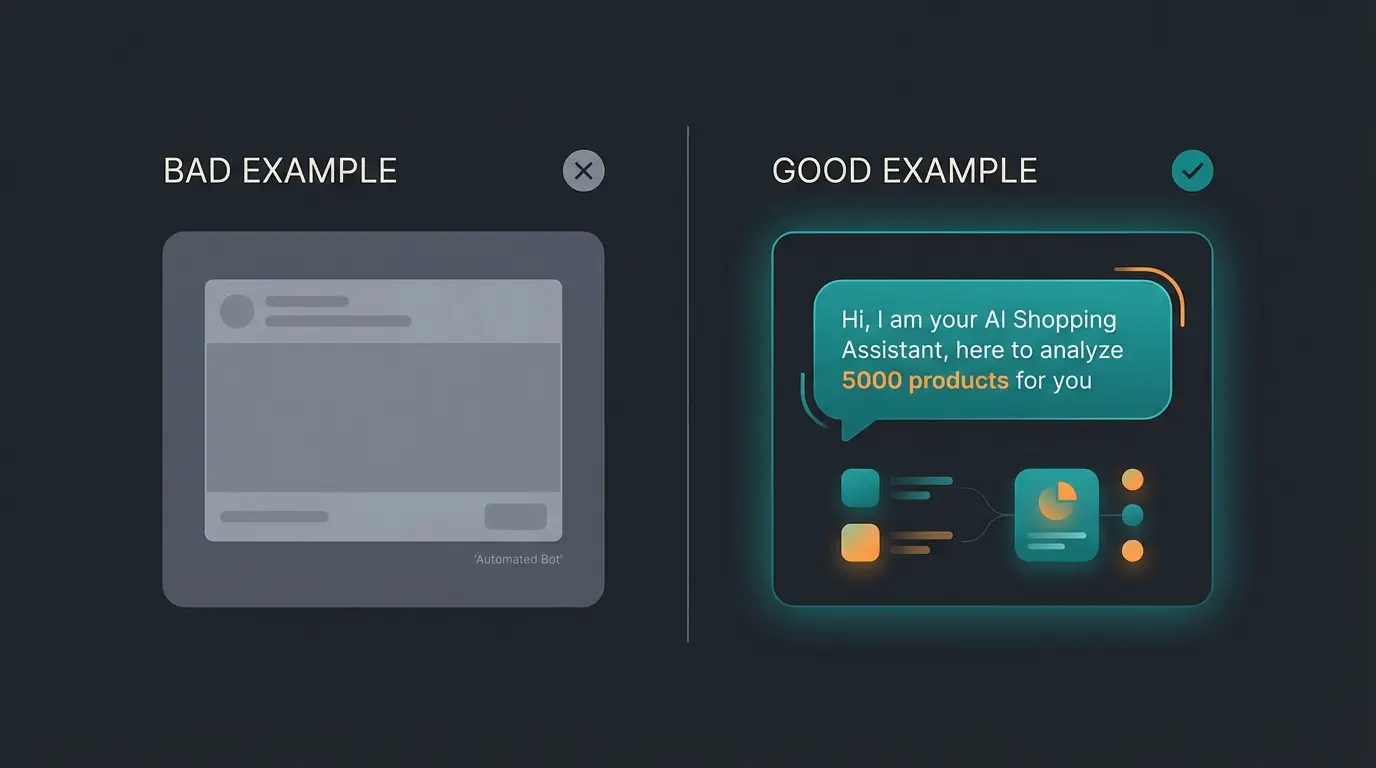

Most companies fear the "Transparency Obligation" under Article 50. They worry that labeling a chatbot as an "AI" will scare away customers. However, in the context of product consultation, the opposite is often true. Transparency can be a massive driver of trust.

Turning Compliance into Conversion

Instead of a dry legal warning stating "This is a bot," successful e-commerce brands use value-driven disclosures. For example: "I am an AI assistant trained to analyze 5,000 laptops to find your perfect match." This satisfies the legal requirement to disclose non-human interaction while also highlighting the tool's superhuman capabilities (speed and data volume).

| Standard Bot (Compliance Focus) | Sales AI (Trust Focus) |
|---|---|
| "I am an automated system." | "I am your AI Shopping Assistant." |
| Hidden in a footer or small print. | Clear welcome message explaining value. |
| Focuses on liability. | Focuses on capability and assistance. |
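One way to keep a value-driven greeting compliant is to hard-wire the disclosure into the opening words. Copy changes to the sales pitch then cannot remove it. The sketch below is a minimal illustration. `AI_DISCLOSURE` and `welcome_message` are hypothetical names, and the wording simply mirrors the laptop example above.

```python
# Hypothetical constant: the disclosure phrase every first message starts with.
AI_DISCLOSURE = "I am an AI assistant"


def welcome_message(category: str, catalog_size: int) -> str:
    """Build a value-driven greeting that opens with the AI disclosure."""
    # The disclosure is fixed at the start, so later edits to the sales
    # pitch cannot accidentally drop it.
    return (
        f"{AI_DISCLOSURE} trained to analyze {catalog_size:,} {category} "
        "to find your perfect match."
    )


if __name__ == "__main__":
    print(welcome_message("laptops", 5000))
    # I am an AI assistant trained to analyze 5,000 laptops to find your perfect match.
```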

Manipulation vs. Persuasion: Drawing the Line

A unique challenge for sales-focused AI is the prohibition on manipulative techniques. The EU AI Act bans AI that uses subliminal, purposefully manipulative, or deceptive techniques to materially distort behavior in a way that causes, or is likely to cause, significant harm. Where does aggressive sales persuasion end and manipulation begin?

Ethical AI product consultation stays on the safe side by focusing on rational features and user needs rather than emotional manipulation or dark patterns. If your AI helps a user filter products based on their stated budget and preferences, that is helpful persuasion. If it artificially creates urgency (e.g., "Only 1 left!" when there are 100) or exploits vulnerable user states, it risks crossing into prohibited territory.
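The fake-scarcity example can be avoided by deriving any urgency message from real inventory data. The sketch below shows one way to do this. It assumes the assistant can read the current stock level. The threshold of 3 units and the name `scarcity_notice` are hypothetical shop settings, not requirements from the Act.

```python
from typing import Optional

LOW_STOCK_THRESHOLD = 3  # hypothetical shop setting


def scarcity_notice(units_in_stock: int, threshold: int = LOW_STOCK_THRESHOLD) -> Optional[str]:
    """Return an urgency message only when it is literally true, else None."""
    if units_in_stock <= 0:
        return "Currently out of stock."
    if units_in_stock <= threshold:
        unit_word = "unit" if units_in_stock == 1 else "units"
        return f"Only {units_in_stock} {unit_word} left."
    return None  # plenty in stock: make no urgency claim at all
```

With 100 units in stock, the function returns `None`, so the assistant says nothing about scarcity instead of inventing it.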

Impact on Businesses Using AI

The EU AI Act will have far-reaching effects on companies developing or deploying AI systems. The new regulations require careful adjustment of processes and strategies to ensure compliance while driving innovation.

Necessary Adjustments in Development

Companies must review and adapt their AI development processes to meet EU AI Act requirements. This particularly affects the development of high-risk AI systems, for which strict guidelines apply. These include ensuring high data quality and governance, guaranteeing transparency and traceability of AI decisions, and integrating human oversight into AI operations. These adjustments may require significant resources but also offer the chance to develop more robust and trustworthy AI systems.

Examples of Affected AI Applications

The EU AI Act will impact various AI applications that are already widespread or gaining importance. Some examples include:

- [AI Employees](/blog/ai-employees-revolutionizing-workplace): Virtual assistants and AI-based decision support systems must be designed transparently and fairly, with clear mechanisms for human oversight.

- [AI-based Product Consultation](/product-consultation-via-ai): Systems for automated product recommendations must ensure they do not provide discriminatory or misleading recommendations.

- [AI in Customer Service](/customer-service-via-ai): Chatbots and automated customer support systems must clearly communicate that they are AI-based. Offering simple escalation to human employees when needed is good practice.

These examples illustrate how comprehensively the EU AI Act will intervene in existing AI applications and what adjustments companies must make to remain compliant.

Pros and Cons of the Regulation

The EU AI Act has far-reaching implications for the development and use of AI technologies. As with any comprehensive regulation, there are both advantages and potential challenges.

Advantages for Consumers and Society

The EU AI Act offers numerous benefits for consumers and society as a whole: increased protection of fundamental rights and privacy, more transparency in AI-supported decisions, promotion of trustworthy and ethical AI, harmonized standards across the EU, and potential strengthening of consumer trust in AI technologies. These benefits can lead to a more responsible and trustworthy development and use of AI technologies, as discussed in the context of the AI Revolution.

Potential Challenges for Companies

Despite the benefits, companies face challenges in implementing the EU AI Act: increased compliance effort and costs, possible restrictions on innovation, complexity in classifying AI systems, necessity of adapting existing AI systems, and potential competitive disadvantages compared to non-EU companies. These challenges could be significant, especially for smaller companies and start-ups.

Cheatsheet: Best Practices for Preparation

To support companies in navigating the EU AI Act, we have summarized the most important points in a clear checklist. By taking a proactive approach, companies can not only ensure compliance but also achieve competitive advantages. The full text of the EU AI Act offers detailed information on all requirements.

- €35 million: the maximum fine, or up to 7% of global turnover, for prohibited AI practices.

- 24 months: the time given for most high-risk AI systems to become fully compliant.

Conclusion: The Future of AI Under the EU AI Act

The EU AI Act marks a decisive turning point in the regulation of artificial intelligence. As the world's first comprehensive AI legislation, it sets new standards for the responsible handling of this transformative technology. Through its risk-based approach, the Act creates a framework that promotes innovation while protecting fundamental rights and EU values.

For companies, the EU AI Act represents both a challenge and an opportunity. While strict requirements for high-risk AI systems require adjustments in development and operations, they also offer the opportunity to strengthen consumer trust. The transparency obligations for providers of limited-risk AI systems promote a more open and responsible handling of AI in society.

At the same time, the Act places Europe at the forefront of global AI regulation. It could serve as a blueprint for similar legislation worldwide, significantly influencing international discourse on AI governance. This offers European companies the chance to position themselves as pioneers for trustworthy and ethical AI. With the rapid development of AI technologies like GPT-5, continuous adaptation of the regulatory framework will be necessary. Companies should therefore not only focus on compliance but proactively participate in shaping responsible AI practices.

Product consultation chatbots are covered by the EU AI Act, but usually under the 'Limited Risk' category. This means you generally don't need a complex risk assessment, but you must strictly follow the transparency rules of Article 50 and ensure users know they are communicating with a machine.

Penalties are severe. Violations of prohibited practices can result in fines of up to 35 million euros or 7% of global annual turnover, whichever is higher.
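"Whichever is higher" is simply the maximum of a fixed amount and a share of turnover. The sketch below computes that upper bound. It assumes the Article 99 caps for prohibited practices (€35 million or 7% of total worldwide annual turnover for the preceding financial year). It also applies the Act's special rule for SMEs and start-ups, where the lower of the two amounts applies. The function name is hypothetical, and the result is a ceiling: actual fines are set case by case by the authorities.

```python
def max_fine_prohibited_practice(annual_turnover_eur: int, is_sme: bool = False) -> float:
    """Upper bound (EUR) of the fine for a prohibited AI practice."""
    fixed_cap = 35_000_000                        # EUR 35 million
    turnover_cap = annual_turnover_eur * 7 / 100  # 7% of worldwide annual turnover
    # General rule: the higher of the two caps; SMEs and start-ups: the lower.
    if is_sme:
        return float(min(fixed_cap, turnover_cap))
    return float(max(fixed_cap, turnover_cap))


if __name__ == "__main__":
    print(max_fine_prohibited_practice(1_000_000_000))             # 70000000.0
    print(max_fine_prohibited_practice(100_000_000))               # 35000000.0
    print(max_fine_prohibited_practice(100_000_000, is_sme=True))  # 7000000.0
```

For a retailer with €1 billion in turnover, the 7% share (€70 million) exceeds the fixed €35 million, so the percentage sets the ceiling.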

The law entered into force in August 2024, but its rules apply in stages. Prohibited practices are banned from February 2025, and the rules for general-purpose AI models apply from August 2025. Most remaining obligations, including those for high-risk systems, apply from August 2026.

Personalized recommendations are generally allowed and fall under minimal or limited risk, provided they do not use subliminal techniques to manipulate behavior or exploit vulnerabilities (Dark Patterns).
